Sage Interactions - Web applications

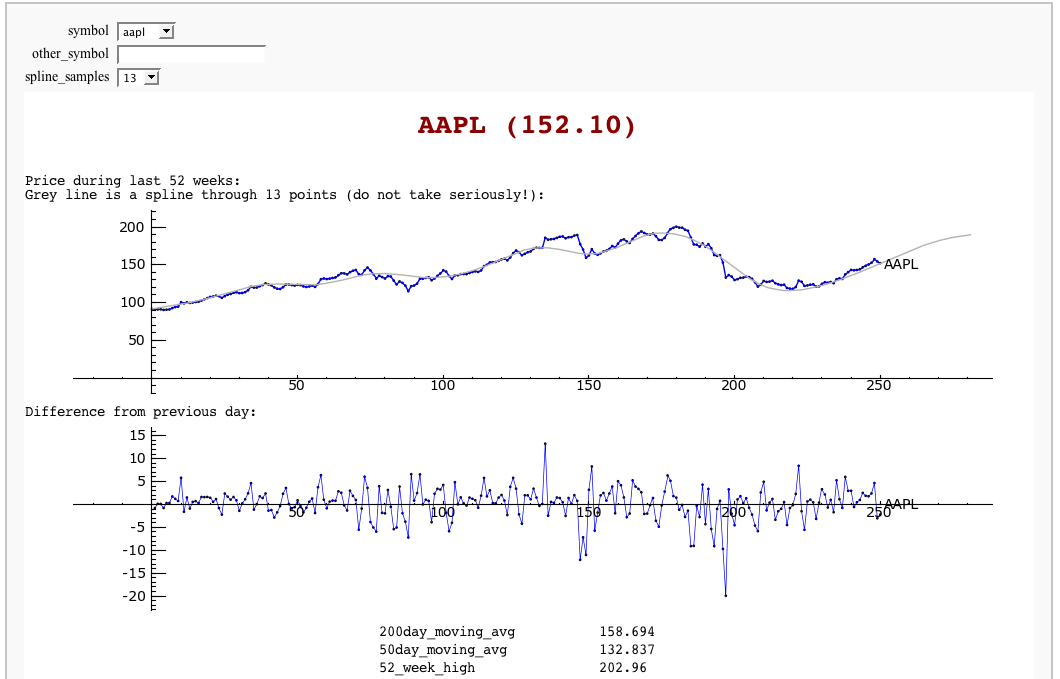

Stock Market data, fetched from Yahoo and Google

by William Stein

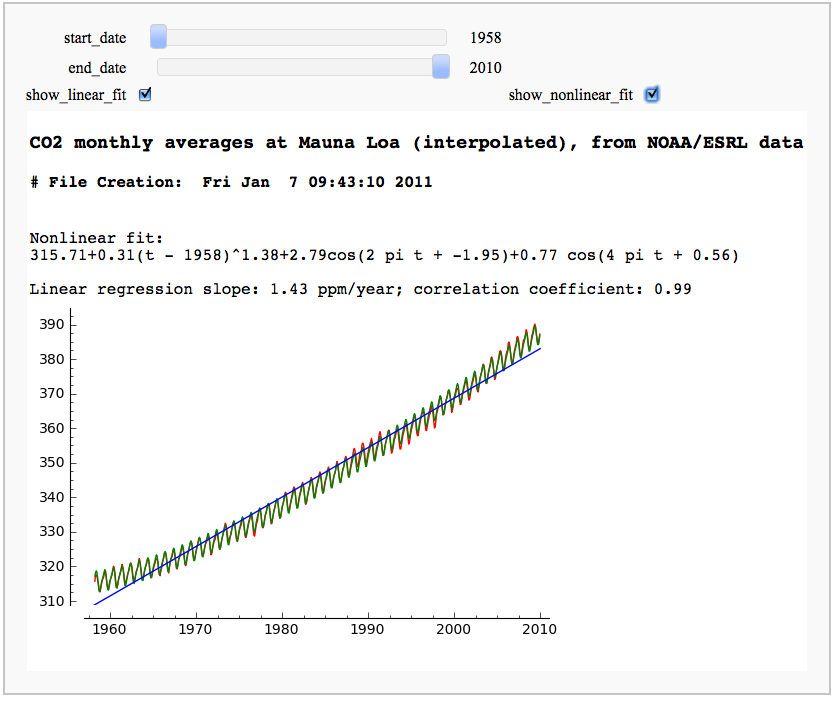

CO2 data plot, fetched from NOAA

by Marshall Hampton

While support for R is rapidly improving, scipy.stats also provides many useful statistical routines. This example only scratches the surface.
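As a rough illustration of the kind of thing scipy.stats offers, the sketch below fits a straight-line trend with `scipy.stats.linregress`. It is not the code from the original interact: the helper name `fit_trend` and the data are made up for illustration, and the series is synthetic rather than real NOAA measurements.

```python
import numpy as np
from scipy import stats


def fit_trend(years, values):
    """Fit a least-squares line and return (slope, intercept, r_squared)."""
    result = stats.linregress(years, values)
    return result.slope, result.intercept, result.rvalue ** 2


# Synthetic series for illustration only (NOT real NOAA data):
# a value that grows by exactly 2.0 units per year from 370.0.
years = np.arange(2000, 2010)
values = 370.0 + 2.0 * (years - 2000)

slope, intercept, r_squared = fit_trend(years, values)
print("trend per year:", slope)
print("r squared:", r_squared)
```

With real monthly data, the seasonal cycle would probably need to be removed or modelled before a linear trend means much.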

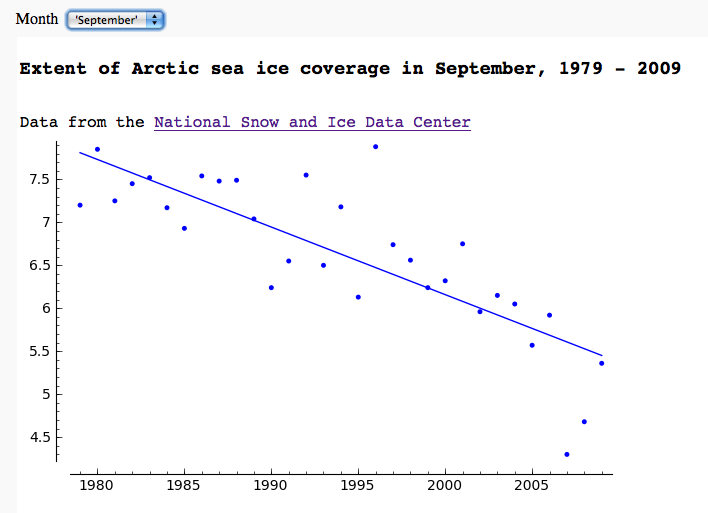

Arctic sea ice extent data plot, fetched from NSIDC

by Marshall Hampton

Pie Chart from the Google Chart API

by Harald Schilly
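The original interact code is not reproduced here. As a minimal sketch of the general idea, a pie chart could be requested from the legacy Google Image Charts service by encoding the data in a URL. The helper name `pie_chart_url` and the sample values are hypothetical, and the service has since been deprecated, so the URL may no longer return an image.

```python
from urllib.parse import urlencode


def pie_chart_url(data, labels, width=400, height=200):
    """Build a legacy Google Image Charts URL for a pie chart (sketch only)."""
    params = {
        "cht": "p",                                   # chart type: pie
        "chs": "%dx%d" % (width, height),             # image size in pixels
        "chd": "t:" + ",".join(str(v) for v in data), # data in text encoding
        "chl": "|".join(labels),                      # one label per slice
    }
    return "https://chart.googleapis.com/chart?" + urlencode(params)


# Hypothetical sample values, for illustration only.
url = pie_chart_url([60, 40], ["Sage", "Other"])
print(url)
```

In a Sage interact, a URL like this would typically be embedded in an HTML image tag so the chart is rendered by the remote service rather than by Sage itself.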